When managing data pipelines, one step can’t be overlooked: defining a PySpark schema upfront. It’s a safeguard that ensures every new batch of data lands with the same structure. But if you’ve ever written Spark schemas by hand, especially for intricate JSON datasets, you know how challenging and time-consuming it is. In this post, I’ll explain why upfront schema definition matters, touch on the roadblocks of manual methods, and show how ChatGPT can speed up and automate much of the process.

Why is Defining a Schema Upfront Important?

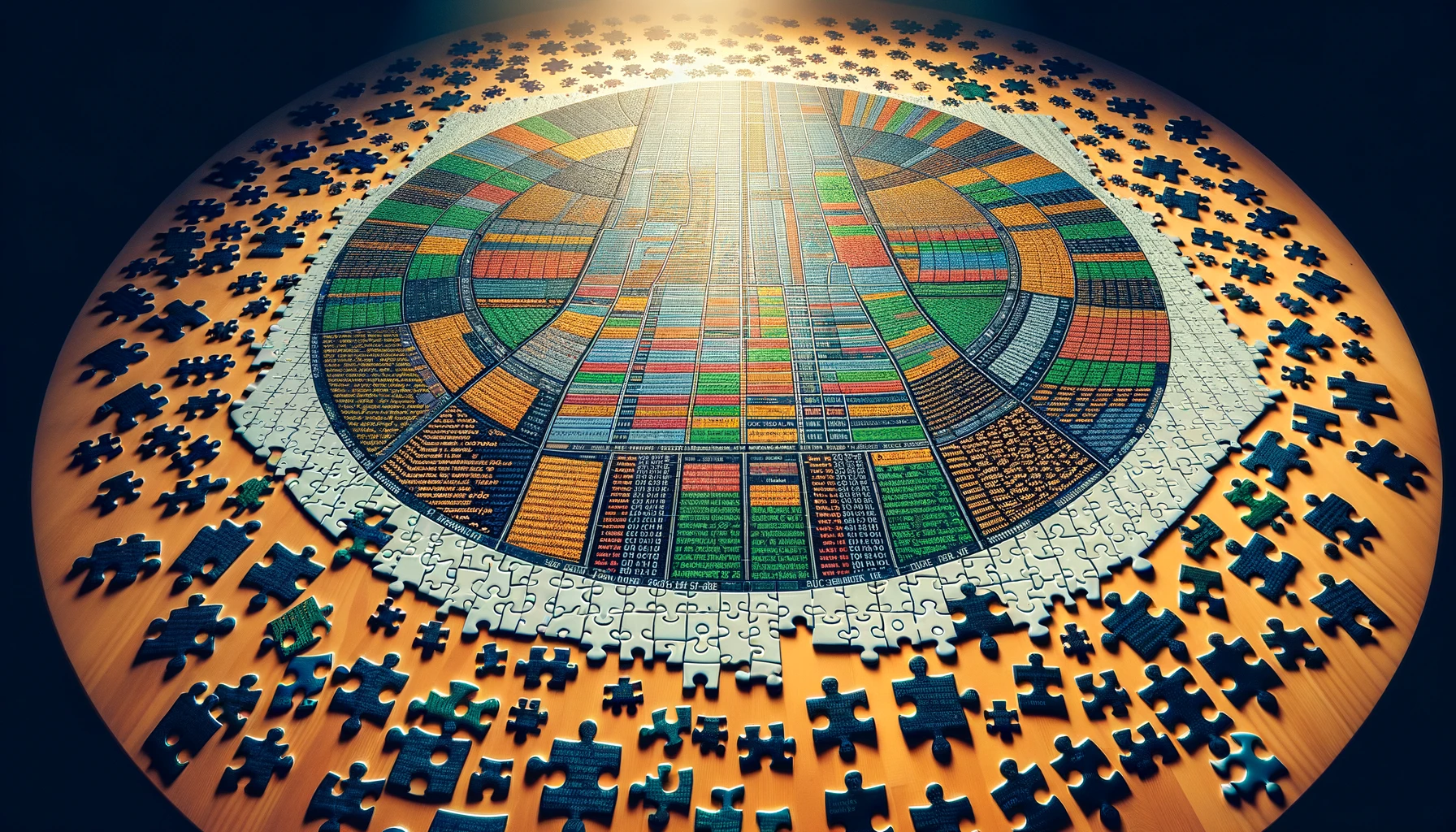

Setting up a schema before deploying a data pipeline isn’t just a good idea; it’s vital. Think of a pipeline as assembling a massive jigsaw puzzle: data flows continuously from source to destination systems, and countless data files merge to form what appears to be one cohesive table. Without a consistent schema, new pieces might not fit, leading to errors. Let’s break down why this matters:

- Performance Boost:
  - Consistency: With a defined schema, every field has a known name and type. Spark isn’t left guessing about the data’s structure, so it can start processing right away.
  - Reduced Overhead: When Spark infers a JSON schema, it has to scan the input before it can read it. Supplying the schema removes that extra pass each time new data arrives, saving both time and computational resources.

- Data Accuracy:
  - Explicit Data Types: By defining the data types upfront, you state the format every field is expected to follow. Type mismatches surface when the data is read instead of slipping through as silent, erroneous conversions.
  - Data Integrity: A defined schema acts as a gatekeeper for the data entering the pipeline, reducing the chances of inconsistencies or anomalies. How strictly it does so depends on the read mode: Spark’s default PERMISSIVE mode nulls out values it cannot parse, while FAILFAST raises an error and DROPMALFORMED discards the offending records.

- Maintainability:
  - Streamlined Debugging: When something goes amiss, a defined schema makes it easier to pinpoint the issue. Instead of sifting through the entire dataset, you can compare the failing records against the expected fields and types.
  - Documentation: A schema also serves as documentation. It provides a clear snapshot of what the data should look like, making it easier for teams to collaborate and onboard new members.

- Scalability:
  - Uniformity: As your data operations grow and you integrate more datasets, a predefined schema ensures that all data, old or new, follows the same structure. This uniformity is key to seamlessly scaling your data pipelines.

Defining a schema upfront might seem like a preparatory step that could be postponed. In practice, it’s a foundation that keeps your data operations running smoothly, accurately, and efficiently.
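To make the gatekeeper idea concrete, here’s a minimal plain-Python sketch of what checking records against a declared schema amounts to. It is not how Spark implements schema enforcement, and the names `EXPECTED_FIELDS` and `check_record` are made up for this illustration. The point is only that once the expected fields and types are written down, every deviation can be named.

```python
# Hypothetical declared schema: field name -> expected Python type.
EXPECTED_FIELDS = {"user_id": int, "name": str, "email": str}


def check_record(record, expected=EXPECTED_FIELDS):
    """Return a list of human-readable problems; an empty list means the record fits."""
    problems = []
    # Every declared field must be present and have the declared type.
    for field, expected_type in expected.items():
        if field not in record:
            problems.append(f"{field}: missing")
        elif not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    # Fields that were never declared are reported as well.
    for field in record:
        if field not in expected:
            problems.append(f"{field}: unexpected field")
    return problems


good_record = {"user_id": 12345, "name": "John Doe", "email": "john.doe@example.com"}
bad_record = {"user_id": "12345", "name": "John Doe"}

print(check_record(good_record))  # []
print(check_record(bad_record))   # ['user_id: expected int, got str', 'email: missing']
```

Without a declared schema, neither problem in `bad_record` has anything to be checked against, which is why these issues tend to surface much later in a pipeline.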

The Challenges of Manually Creating a Schema

- Time-Consuming:
  - Diverse Data Types: Different data types, nested structures, and variability in fields mean that manually defining each aspect can be a lengthy process.
  - Iterative Process: Often, it’s not about just setting it once and forgetting it. As new nuances in the data emerge, the schema may need adjustments, adding to the time spent on schema definition.

- Potential for Human Error:
  - Mistyped Fields: A small typo can cause significant discrepancies in data processing, leading to inaccuracies or even pipeline failures.
  - Oversights: It’s not uncommon to overlook certain nested structures or optional fields, especially in large datasets, which can impact the reliability of the data pipeline.

- Complexity Management:
  - Nested Structures: JSON data, in particular, can have deeply nested structures. Manually creating a schema for such data requires careful navigation through each level, ensuring nothing is missed.
  - Varying Fields: Sometimes, datasets have fields that may or may not be present in every entry. Accounting for these variations manually can be challenging.

- The Perils of Schema Inference:
  - Inconsistencies Across Batches: Relying on Spark to infer the schema for every new data batch is risky. Variations in data, such as missing fields, can result in Spark inferring a different schema than previous batches. This inconsistency can lead to unexpected results or failures.
  - Performance Overhead: Schema inference isn’t free; it demands additional computational resources. Regularly inferring schemas, especially for large datasets, can hamper performance.
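The batch-to-batch inconsistency is easy to reproduce in miniature. The sketch below is a simplified plain-Python stand-in for schema inference, not Spark’s actual algorithm. The helper names `infer_fields` and `schema_drift` and the two sample batches are invented for illustration.

```python
def infer_fields(batch):
    """Map each field name to the sorted list of Python type names seen in the batch."""
    seen = {}
    for record in batch:
        for name, value in record.items():
            seen.setdefault(name, set()).add(type(value).__name__)
    return {name: sorted(types) for name, types in seen.items()}


def schema_drift(old, new):
    """Summarise how the schema inferred for a new batch differs from the old one."""
    return {
        "missing": sorted(set(old) - set(new)),
        "added": sorted(set(new) - set(old)),
        "changed": sorted(n for n in set(old) & set(new) if old[n] != new[n]),
    }


batch_1 = [
    {"user_id": 1, "zipcode": "12345", "last_login": "2023-10-20"},
    {"user_id": 2, "zipcode": "54321", "last_login": "2023-10-21"},
]
# In the second batch, zipcode arrives as a number and last_login is absent.
batch_2 = [
    {"user_id": 3, "zipcode": 12345},
]

drift = schema_drift(infer_fields(batch_1), infer_fields(batch_2))
print(drift)  # {'missing': ['last_login'], 'added': [], 'changed': ['zipcode']}
```

Each batch is internally consistent, so inference succeeds on both. The trouble only appears when the two results have to line up in the same table.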

Manually creating a schema gives you hands-on control, but it clearly has its pitfalls, and falling back on inference only trades them for others. Tools that simplify schema creation help keep data pipelines robust and reliable.

Harnessing ChatGPT for PySpark Schema Generation

With ChatGPT, generating a Spark schema is straightforward. Paste a representative sample of your JSON and ask ChatGPT to generate a PySpark schema for it. Here’s an example of JSON you can give ChatGPT:

    {
        "user_id": 12345,
        "name": "John Doe",
        "email": "john.doe@example.com",
        "address": {
            "street": "123 Elm St",
            "city": "Metropolis",
            "zipcode": "12345"
        },
        "subscription_status": "active",
        "last_login": "2023-10-20"
    }

And here’s the output:

    from pyspark.sql.types import StructType, StructField, IntegerType, StringType, DateType

    # The third argument to StructField marks the field as nullable.
    userSchema = StructType([
        StructField("user_id", IntegerType(), True),
        StructField("name", StringType(), True),
        StructField("email", StringType(), True),
        # The nested "address" object becomes a nested StructType.
        StructField("address", StructType([
            StructField("street", StringType(), True),
            StructField("city", StringType(), True),
            StructField("zipcode", StringType(), True)
        ]), True),
        StructField("subscription_status", StringType(), True),
        StructField("last_login", DateType(), True)  # Assumes "last_login" is in the "YYYY-MM-DD" format
    ])

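The appeal of this approach is its simplicity. By translating complex data structures into usable schemas, ChatGPT cuts the time and effort this phase of data pipeline creation traditionally requires. The generated schema can then be handed to the reader, for example `spark.read.schema(userSchema).json(path)`, where `path` points at your data. As with any tool, it’s essential to understand its capabilities and limits, so review the generated schema against your data before relying on it.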
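One way to sanity-check a generated schema is to derive a second opinion mechanically from the same sample. Here’s a minimal sketch, in plain Python, that turns a JSON sample into a Spark DDL schema string. The function names `ddl_type` and `json_to_ddl` are my own. The type choices (every integer becomes BIGINT, anything shaped like YYYY-MM-DD becomes DATE, nulls and empty arrays fall back to STRING) are assumptions of this sketch, not rules Spark or ChatGPT follow.

```python
import json
import re

# Heuristic: treat strings shaped like YYYY-MM-DD as dates.
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def ddl_type(value):
    """Map one parsed JSON value to a Spark DDL type name (heuristic)."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "BOOLEAN"
    if isinstance(value, int):
        return "BIGINT"
    if isinstance(value, float):
        return "DOUBLE"
    if isinstance(value, str):
        return "DATE" if ISO_DATE.fullmatch(value) else "STRING"
    if isinstance(value, dict):
        fields = ", ".join(f"{k}: {ddl_type(v)}" for k, v in value.items())
        return f"STRUCT<{fields}>"
    if isinstance(value, list):
        # Assumption: arrays are homogeneous, so the first element decides the type.
        return f"ARRAY<{ddl_type(value[0])}>" if value else "ARRAY<STRING>"
    return "STRING"  # null: nothing to infer from, so fall back to STRING


def json_to_ddl(sample):
    """Build a DDL schema string from a JSON object given as text."""
    record = json.loads(sample)
    return ", ".join(f"{name} {ddl_type(value)}" for name, value in record.items())


SAMPLE = """
{
    "user_id": 12345,
    "name": "John Doe",
    "email": "john.doe@example.com",
    "address": {"street": "123 Elm St", "city": "Metropolis", "zipcode": "12345"},
    "subscription_status": "active",
    "last_login": "2023-10-20"
}
"""

print(json_to_ddl(SAMPLE))
# user_id BIGINT, name STRING, email STRING, address STRUCT<street: STRING, city: STRING, zipcode: STRING>, subscription_status STRING, last_login DATE
```

Spark’s reader accepts a DDL-formatted string like this in place of a `StructType`, so the result can be compared directly with what ChatGPT produced. Here the two agree on every field except `user_id`: ChatGPT chose `IntegerType`, while this sketch maps every integer to BIGINT. That is exactly the kind of difference worth a deliberate decision. Note that the sketch does not quote field names, so names containing spaces or punctuation would need extra handling.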

Final Thoughts

Defining a schema upfront is crucial for the integrity of Spark data pipelines. Manual schema creation has its place, and schema inference brings its own set of challenges, but automation with tools like ChatGPT offers great efficiency. This streamlined approach saves time and helps keep data processing consistent.

Thanks for reading!